Google has developed an algorithm that can decipher house numbers from Street View images with impressive accuracy. The problem is that the algorithm can match or even outperform humans when confronted with Google’s own use of CAPTCHA tests.

Because so much of the data collection for Google Maps is automated, the first drafts of the maps often lack the ability to match a specific number to a specific building, meaning maps can’t always highlight a particular building (or part of a larger building) in a street.

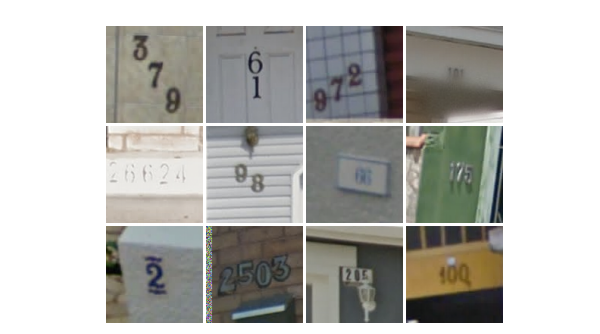

One way round this is to automatically hunt for building numbers that appear in the images captured for the Street View images. That’s not always easy as the numbers on the side of buildings appear in many different typefaces at different sizes and with differing degrees of contrast to the backgrounds.

In a paper published via Cornell University, Google researchers have rethought the way this recognition works. It says traditionally computer systems work on image recognition in three distinct steps: figuring out what part of the image actually houses the characters, breaking it down into manageable sections, then recognizing it piece by piece.

In this case, the researchers instead developed what they call “a deep convolutional neural network that operates directly on the image pixels.” In simpler terms, they tried to develop a system that looks at the entire image and tried to recognize characters in a single process. That’s the technique humans use and has usually been considered as a task computers can’t perform as quickly.

The researchers say they initially tested their algorithm on the Street View House Numbers (SVHN) dataset, a selection of images Google makes publicly available for testing and refining automated recognition tools. The algorithm successfully recognized 96 percent of complete numbers and 97.84 percent of individual digits.

They then turned to the complete Google database, which contains many images considered too difficult to be worth including in SVHN. Here they still managed 90 percent accuracy.

Realizing the technique might work in other areas, the researchers then tried the algorithm on reCAPTCHA, Google’s own system used to distinguish between humans and bots. Not only does reCAPCTAH use letters as well as numbers, but it also distorts the image. The idea is that humans can quickly piece together and reverse-engineer the effects of the distortion, whereas a computer is forced to try out every possible change to get back to the original image and then recognize the characters.

According to the researchers, the algorithm was able to get 99.8% accuracy on the hardest category in the reCAPTCHA test.

Google now concedes that reCAPTCHA as it stands may no longer be sufficient to distinguish between human and machine:

Thanks to this research, we know that relying on distorted text alone isn’t enough. However, it’s important to note that simply identifying the text in CAPTCHA puzzles correctly doesn’t mean that reCAPTCHA itself is broken or ineffective. On the contrary, these findings have helped us build additional safeguards against bad actors in reCAPTCHA.

Understandably Google isn’t detailing these changes, which have involved cooperation between the researchers and the reCAPTCHA department. However, the paper suggests the simplest option is to make the CAPTCHA text longer, something that not only requires more computer resources to automatically crack, but also increases the significance of whatever error rate does exist.

It also suggests a more effective change would be to have the CAPTCHA text sliding around over a static image background, effectively creating a moving target for automated recognition.